"Animatronic Humanoid Robot Project", which this page introduces, was funded by the New Energy and Industrial Technology Development Organization (NEDO) under the Project for the Practical Application of Next-Generation Robots in the 21st Century Robot Challenge Program, and was presented by the Automatic Control Lab., Dept. of Mechano-Informatics, Univ. of Tokyo. The results were demonstrated for 11 days, from Jun. 9 to 19, 2005, at the Prototype Robot Exhibition, EXPO2005 in Aichi, Japan.

The focus of humanoid robot research has recently been shifting from studies of specific individual movements toward coordinated systems that orchestrate whole-body movement, i.e., systems that enable robots to move in all kinds of ways.

Humanoid control is one of the most daunting problems in robotics: it requires coordinating tens of articulated bodies under dynamical phenomena such as collision and contact with the environment, in the presence of various disturbances. Moreover, the evolution from "action" to "behavior" requires combining motion control with a further step of intelligent information processing. Lively, human-like motion synthesis is achieved only by uniting these technologies, each of which is challenging in its own right.

Although this is a first attempt, the project realized a complete humanoid robot system in the sense that it

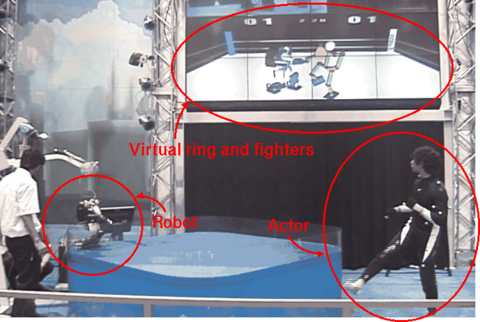

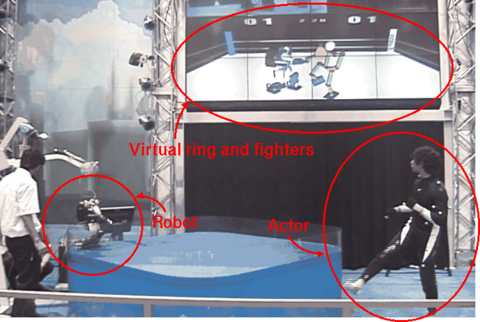

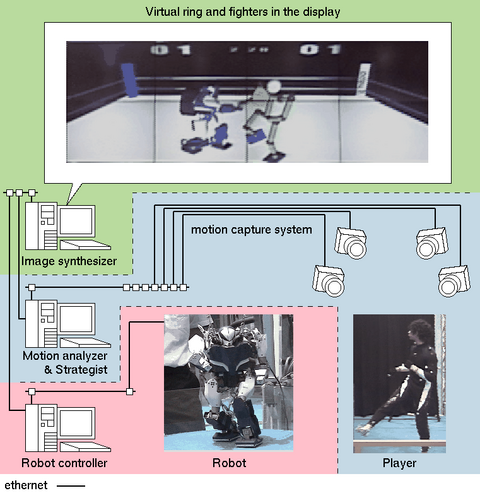

The system consists of three parts, namely 1) the motion analyzer & strategist, 2) the robot controller, and 3) the image synthesizer, as the figure above illustrates.

The robot and the human player face each other at a distance. The player's actions are measured by a motion capture system using cameras installed in the field. Based on the mimetic communication model, the strategist recognizes the player's "will" and decides the robot's strategy in real time. The corresponding action commands are sent to the robot controller. These commands describe only the "apparent" movements of the whole body and may be inconsistent with physical laws. Online motion retouch modifies them into physically consistent motions and applies them to the real robot. Finally, the image synthesizer composes the virtual fight from the resulting motion of the robot and the measured motion of the player.

The game proceeds in the synthesized world. Collisions between the two are detected geometrically on the virtual characters; the physical impacts are not fed back to the real human and robot.
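The page does not say how the geometric collision check is implemented. As a minimal sketch, assuming each limb of a virtual character is approximated by a capsule (a line segment swept by a sphere), a hit can be declared when the distance between two limb segments falls below the sum of their radii. The `Capsule` type, the limb names and the radii are hypothetical; the distance routine is the standard closest-points-between-two-segments computation.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Capsule:
    """Hypothetical limb model: segment p-q swept by a sphere of `radius` [m]."""
    name: str
    p: np.ndarray
    q: np.ndarray
    radius: float


def segment_distance(p1, q1, p2, q2, eps=1e-12):
    """Minimum distance between segments p1-q1 and p2-q2."""
    p1, q1, p2, q2 = (np.asarray(v, dtype=float) for v in (p1, q1, p2, q2))
    d1, d2, r = q1 - p1, q2 - p2, p1 - p2
    a, e, f = d1 @ d1, d2 @ d2, d2 @ r
    if a <= eps and e <= eps:          # both segments degenerate to points
        return float(np.linalg.norm(r))
    if a <= eps:                       # first segment is a point
        s, t = 0.0, float(np.clip(f / e, 0.0, 1.0))
    else:
        c = d1 @ r
        if e <= eps:                   # second segment is a point
            s, t = float(np.clip(-c / a, 0.0, 1.0)), 0.0
        else:
            b = d1 @ d2
            denom = a * e - b * b      # zero when the segments are parallel
            s = float(np.clip((b * f - c * e) / denom, 0.0, 1.0)) if denom > eps else 0.0
            t = (b * s + f) / e
            if t < 0.0:                # clamp t to the segment, then recompute s
                s, t = float(np.clip(-c / a, 0.0, 1.0)), 0.0
            elif t > 1.0:
                s, t = float(np.clip((b - c) / a, 0.0, 1.0)), 1.0
    return float(np.linalg.norm((p1 + s * d1) - (p2 + t * d2)))


def capsules_collide(c1: Capsule, c2: Capsule) -> bool:
    """Two capsules touch when their core segments are closer than r1 + r2."""
    return segment_distance(c1.p, c1.q, c2.p, c2.q) <= c1.radius + c2.radius


def detect_hits(attacker, defender):
    """Names of all (attacker limb, defender limb) pairs that touch in this frame."""
    return [(a.name, d.name) for a in attacker for d in defender if capsules_collide(a, d)]
```

Because only the synthesized characters are tested, a check like this can run every rendered frame on the robot's resulting motion and the player's measured motion without any physical contact.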

The MAC3D system by Motion Analysis was adopted as the motion capture system. Ten cameras on the ceiling play the role of the robot's eyes and measure the positions of the retroreflective markers on the suit worn by the player. The measured marker positions are labeled by the bundled software and then converted to a set of joint angles in real time by a fast inverse kinematics method that we developed. As a result, the player's motion patterns are recognized as posture sequences.
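The fast inverse kinematics solver itself is not described on this page. As a much simpler illustration of turning labeled marker positions into a posture sequence, the sketch below computes elbow flexion angles directly from three markers per arm. The marker labels (`R_SHO`, `R_ELB`, ...) and the two-angle posture vector are hypothetical; a real whole-body IK would fit every joint of a skeleton model to all markers at once.

```python
import numpy as np


def joint_angle(a, b, c):
    """Angle [rad] at marker b between the directions b->a and b->c."""
    u = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    v = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos_t = (u @ v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.arccos(np.clip(cos_t, -1.0, 1.0)))  # clip guards round-off


def elbow_flexion(shoulder, elbow, wrist):
    """0 for a straight arm, pi/2 when the forearm is perpendicular to the upper arm."""
    return np.pi - joint_angle(shoulder, elbow, wrist)


def posture_sequence(frames):
    """frames: list of dicts mapping (hypothetical) marker labels to 3D positions [m].

    Returns an array of shape (n_frames, 2): right and left elbow flexion per frame.
    """
    return np.array([
        [elbow_flexion(f["R_SHO"], f["R_ELB"], f["R_WRI"]),
         elbow_flexion(f["L_SHO"], f["L_ELB"], f["L_WRI"])]
        for f in frames
    ])
```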

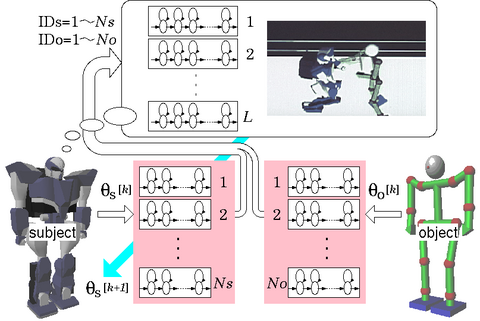

The mimesis theory propounded by Donald holds that the repetitive process of mimicry, namely recognizing others' behaviors and remapping them onto one's own body, cultivates an intelligent mechanism that symbolizes behaviors and, from those symbols, associates various behaviors. This inspired us to develop the "mimesis loop" framework, in which motion symbolization and association are achieved by a stochastic algorithm using Hidden Markov Models (HMMs).
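To make HMM-based symbolization concrete, the sketch below scores an observed motion sequence against several HMMs with the scaled forward algorithm and takes the best-scoring model as the recognized "symbol". It assumes discrete observations, i.e. postures already quantized into a small codebook; the model names and parameters are invented for illustration, and the actual framework may use continuous observation densities and its own training procedure.

```python
import numpy as np


def log_likelihood(obs, pi, A, B):
    """log P(obs | HMM) via the scaled forward algorithm.

    pi: (N,) initial state probabilities
    A:  (N, N) transitions, A[i, j] = P(state j at t+1 | state i at t)
    B:  (N, M) emissions,   B[i, k] = P(symbol k | state i)
    """
    alpha = pi * B[:, obs[0]]
    scale = alpha.sum()
    alpha = alpha / scale
    log_p = np.log(scale)
    for o in obs[1:]:
        alpha = (alpha @ A) * B[:, o]  # propagate one step, weight by emission
        scale = alpha.sum()            # rescaling avoids numerical underflow
        alpha = alpha / scale
        log_p += np.log(scale)
    return float(log_p)


def recognize(obs, models):
    """Return the name of the model (motion symbol) that best explains obs."""
    return max(models, key=lambda name: log_likelihood(obs, *models[name]))


# Two hypothetical left-to-right motion models over 3 quantized postures.
A = np.array([[0.6, 0.4, 0.0], [0.0, 0.6, 0.4], [0.0, 0.0, 1.0]])
pi = np.array([1.0, 0.0, 0.0])
models = {
    "straight_punch": (pi, A, np.array([[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]])),
    "sway_right": (pi, A, np.array([[0.1, 0.1, 0.8], [0.1, 0.8, 0.1], [0.8, 0.1, 0.1]])),
}
```

For example, `recognize([0, 0, 1, 1, 2, 2], models)` picks `"straight_punch"`, because that model's states emit postures 0, 1, 2 in that order.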

The "mimesis loop" had conventionally processed the motions of only one robot. We extended it to learn interaction patterns between multiple humans by hierarchically linking loops. It also functions as an online decision-maker, choosing the robot's own behavior strategy according to the other's behavior and the accumulated interaction patterns. As a result, primitive communication between humans and robots emerges.

Various interaction patterns between two people fighting are accumulated in advance, for example: "sway rightward if the opponent attacks with a straight right; then counterattack during his recovery motion." (Of course, the patterns are not memorized in such logical forms but as sequences of sets of combined motions.) In actual bouts, the robot makes decisions based on this database.
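The interaction patterns are stored as sequences of combined motions and linked hierarchically through HMMs, not as explicit rules. Purely as a simplified stand-in for the decision step, the sketch below counts which robot responses followed each recognized opponent behavior in recorded bouts and, online, returns the most frequent one. The class, the behavior labels and the `default` fallback are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Hashable


class InteractionDatabase:
    """Hypothetical frequency table: opponent behavior symbol -> robot responses."""

    def __init__(self):
        self._counts = defaultdict(Counter)

    def record(self, opponent_behavior: Hashable, robot_response: Hashable) -> None:
        """Accumulate one observed pattern from the recorded human-human bouts."""
        self._counts[opponent_behavior][robot_response] += 1

    def decide(self, opponent_behavior: Hashable, default: Hashable = ("guard",)):
        """Choose the response most often observed after this opponent behavior."""
        responses = self._counts.get(opponent_behavior)
        if not responses:
            return default  # unseen behavior: fall back to a neutral action
        return responses.most_common(1)[0][0]
```

Storing each response as a tuple of motion symbols, such as `("sway_right", "counter_punch")`, keeps the "sway, then counterattack" pattern together as one sequence rather than as two independent rules.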

The decision-maker described in the previous section outputs a command sequence of the desired posture and support status every 30 ms. These commands are not only coarse in both time and space, but may also be inconsistent with physical laws. That is, they occasionally demand that the robot generate a pulling force from the ground, which is impossible. Online motion retouch modifies those coarse commands into ones applicable to the real robot. It works online, whereas previous similar motion modification techniques all work offline.
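Why a commanded motion can be physically impossible can be checked with a point-mass model. Assuming the COG follows the commanded trajectory and ignoring angular momentum about the COG, the vertical ground reaction force is f_z = m(g + z''), which must stay positive because the ground can push but not pull, and the ZMP is x - z x'' / (g + z''), which must lie inside the support region. The sketch below flags violating samples of a one-axis trajectory; the function name, the 1D support interval, and finite differencing of the coarse commands are assumptions for illustration, not the retouch method itself.

```python
import numpy as np

G = 9.8  # gravitational acceleration [m/s^2]


def check_feasibility(x, z, dt, support, mass=1.0):
    """Flag samples of a commanded COG trajectory that violate basic physics.

    x, z    : horizontal / vertical COG positions [m], sampled every dt [s]
    support : (x_min, x_max) of the support region along x [m]
    Returns (pulling, zmp, outside):
      pulling: True where the ground would have to pull the robot (f_z <= 0)
      zmp:     ZMP position [m], NaN where it is undefined
      outside: True where the ZMP leaves the support region
    """
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    # Second derivatives by finite differences (needs at least 3 samples).
    ddx = np.gradient(np.gradient(x, dt, edge_order=2), dt, edge_order=2)
    ddz = np.gradient(np.gradient(z, dt, edge_order=2), dt, edge_order=2)
    fz = mass * (G + ddz)  # vertical ground reaction force [N]
    pulling = fz <= 0.0
    with np.errstate(divide="ignore", invalid="ignore"):
        zmp = np.where(pulling, np.nan, x - z * ddx / (G + ddz))
    outside = ~pulling & ((zmp < support[0]) | (zmp > support[1]))
    return pulling, zmp, outside
```

For instance, a COG commanded to drop with a downward acceleration of 15 m/s^2 (faster than free fall) is flagged as `pulling` at every sample.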

The method stores the successively commanded sequences, segments them whenever a change in the desired support condition is detected, and computes from each segment a set of reference motion functions in accordance with the ZMP criterion. The function set includes the arm posture, the positions and orientations of the feet, the COG position, and the attitude of the trunk. Although this batch operation introduces latency in principle, the method works fully online, taking the robot's actual state at the beginning of each segment as the initial condition. In addition, it is invariant to the quantization (control) period, since all the trajectories are designed as continuous functions.
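The segmentation step and the idea of continuous reference functions can be illustrated with a deliberately reduced sketch. It assumes a hypothetical command format of (time, support state) pairs and closes a segment as soon as the support state changes. For the COG along one axis only, it uses the closed-form solution of the linear inverted pendulum model with a constant ZMP per segment; this pendulum model is a standard textbook substitute, not necessarily the authors' formulation, and the arm, foot and trunk functions are omitted.

```python
import math
from typing import Callable, Iterable, Iterator, List, Tuple

G = 9.8  # gravitational acceleration [m/s^2]

Command = Tuple[float, str]  # hypothetical format: (time [s], desired support state)


def segments_online(commands: Iterable[Command]) -> Iterator[List[Command]]:
    """Yield each segment as soon as a change of the desired support state closes it."""
    segment: List[Command] = []
    for cmd in commands:
        if segment and cmd[1] != segment[-1][1]:
            yield segment  # support changeover detected
            segment = []
        segment.append(cmd)
    if segment:
        yield segment  # flush the last (still open) segment at end of stream


def lipm_cog(x0: float, v0: float, zmp: float, z_c: float) -> Callable[[float], float]:
    """COG position x(t) of a linear inverted pendulum of height z_c with a fixed ZMP.

    Solves x'' = (x - zmp) / Tc^2, Tc = sqrt(z_c / g), from the robot's measured
    state (x0, v0) at the start of the segment; t is time since that start [s].
    """
    tc = math.sqrt(z_c / G)

    def x(t: float) -> float:
        return zmp + (x0 - zmp) * math.cosh(t / tc) + tc * v0 * math.sinh(t / tc)

    return x
```

Because the function returned by `lipm_cog` is continuous in time, a 5 ms servo loop and a 30 ms command loop can sample the same reference without resampling artifacts, which is the sense in which such a design is independent of the quantization period.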

See this page for more information about UT-mu2.

Experimental operation of UT-mu2 (MPEG, 14 MB)

A demonstration at EXPO2005 (MOV, 53 MB; download QuickTime to view the movie)

Synthesized virtual fighters and ring (MPEG, 2.0 MB)

Virtual fight (MPEG, 8.9 MB)

Virtual fight (again) (MPEG, 3.7 MB)

Director: Prof. Yoshihiko Nakamura

Technical director: Prof. Katsu Yamane

Mechanical Design: Kou Yamamoto and Tomomichi Sugihara

Programming: Tomomichi Sugihara, Wataru Takano and Katsu Yamane

Assistant: Masaya Oishi and Kazuhiro Okawa

Cast: Tatsuhito Aono (Sticky the Barbarian), Kou Yamamoto (Referee) and Yoshihiko Nakamura (Dr. Nakamura)

UT-mu2 (magnum the darkside)

Action: Wataru Takano, Tatsuhito Aono and Shuji Suzuki

Cooperation: nac Image Technology Inc., Motion Analysis Corporation

Special Thanks: EDGEWEAR (BGM), Kazumi Suwa (MC) and all YNL members

Sponsor: New Energy and Industrial Technology Development Organization (NEDO)